What if being late to a meeting sparked your next big project?

After our quarterly release, I decided to join an Amazon Q Info Session hosted by a colleague. I was a few minutes late: by the time I joined, they were already deep into installing the Amazon Q CLI and the VS Code extension.

At first, I was just following along. We experimented with simple commands, built a snake game and a car game, and even generated unit tests. But in the back of my mind, one question kept echoing:

“What if this CLI could power automation—or even integrate into CI/CD pipelines?”

The CLI felt natural, almost like running a git command—seamless and fast. By the end of the session, everyone shared their mini-projects. I tried creating an end-to-end UI test but ran into authentication issues. No polished demo from me that day—but I left buzzing with ideas.

Usually, I lean on ChatGPT, but Amazon Q felt… different. The integration felt smoother, more developer-native. That curiosity led me to open my UI repository, start prompting—and that’s where Git Analytics was born.

The Mistakes That Taught Me Discipline

After hours of prompting and refining, I finally got close to the results I wanted. Then I made a mistake: the working version broke, and worse, I accidentally closed my terminal session.

All context was lost. I had to start over.

It was painful, not just because of time lost, but because I couldn’t reproduce the exact same output.

That’s when I learned an invaluable lesson:

Treat AI experiments like software. Use version control.

I began creating a new branch for each iteration. Whenever a prompt produced a working result, I tested it, committed it, and used the goal of the prompt as the commit message.
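Here is a minimal Python sketch of that habit. It assumes Git 2.23 or later, which provides `git switch`. The helper names `start_iteration`, `commit_working_result`, and `run` are hypothetical and not part of Amazon Q or Git Analytics. By default the script only prints the commands, so you can review them before anything touches the repository.

```python
import shlex
import subprocess


def start_iteration(branch: str) -> list[list[str]]:
    """Commands to open a fresh branch before a new round of prompting."""
    return [["git", "switch", "-c", branch]]


def commit_working_result(prompt_goal: str) -> list[list[str]]:
    """Commands to snapshot a tested result, using the prompt's goal as the message."""
    return [
        ["git", "add", "-A"],                  # stage everything the prompt changed
        ["git", "commit", "-m", prompt_goal],  # the goal doubles as the commit message
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> list[str]:
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    rendered = []
    for cmd in commands:
        rendered.append(shlex.join(cmd))  # shell-safe rendering for review
        if dry_run:
            print(rendered[-1])
        else:
            subprocess.run(cmd, check=True)
    return rendered
```

In practice, you would call `run(start_iteration("iter-04-lead-time"))` before prompting. Once the result passes your tests, call `run(commit_working_result("Add lead time chart"), dry_run=False)`.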

Then I discovered Amazon Q’s /save and /load commands.

They preserve your entire chat context, so you can safely resume later. Combined with Git branches, it meant I could always pick up where I left off—both in my code and in my AI’s “memory.”

Version control for your code, context control for your AI.

That combo changed everything.

Learning to Collaborate With AI

In the beginning, I prompted Amazon Q step by step—painstakingly exploring each result. It worked, but it was slow.

Eventually, I shifted from imperative to declarative thinking.

Instead of telling Amazon Q what to do, I told it what I wanted to achieve.

That single change transformed the workflow. Amazon Q began generating entire drafts that matched my end goal. I refined them with my own wireframes and ideas—turning the process into real collaboration.

AI provided the muscle. I provided the direction.

It reminded me of working in a dev team: you can build things alone, but collaboration multiplies creativity. The best results came when I led the vision and let the AI amplify it.

When the Tool Outgrew Its Creator

As Git Analytics grew more complex, I noticed Amazon Q started getting confused, forgetting older functionality or breaking things that once worked.

That’s when it hit me: AI tools don’t replace developers—they accelerate them.

Amazon Q thrived in smaller contexts but struggled when juggling large, interdependent systems. I had to step in, debug manually, and guide it back on track. My development background suddenly became the compass the AI needed.

Without my dev instincts, I couldn’t steer the AI—only follow it.

And that realization reshaped how I thought about these tools. They’re powerful, but only in the hands of someone who knows how to guide them.

Why Git Analytics Matters

Without metrics, we’re blind.

We can’t improve what we can’t see.

That goes against one of software engineering’s core principles: continuous improvement.

Git Analytics gives visibility—turning everyday Git data into meaningful insights.

It’s a multi-repository, dockerized dashboard that lives outside your repositories.

No backend, no setup. Just drop it in your project root and open it in your browser.

It’s insight without friction.

🧩 Get Your Own Copy of Git Analytics

Want to explore your team’s delivery rhythm, collaboration patterns, and test coverage—all directly from Git?

🎁 Download Git Analytics for Free

You’ll get:

- A ready-to-use multi-repository dockerized dashboard

- Step-by-step setup guide

💡 Join me in using Git Analytics to uncover the unseen stories hidden in your team’s commits.

What the Data Revealed

👥 Contributor Tab

At first, it just showed lines of code per developer. But soon, it revealed patterns—months of peak activity, quieter stretches, and each teammate’s rhythm of contribution.

It became a living snapshot of collaboration.
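Here is a sketch of how a dashboard like this might derive lines per developer per month. It assumes the data comes from `git log --numstat --date=format:%Y-%m '--format=@%an|%ad'`. That command prints a marker line with the author name and month, followed by added/deleted/path lines. This is an illustration, and Git Analytics' actual extraction may work differently. The sketch counts added lines only and skips binary files, which `--numstat` reports as `-`. The sample log is made up.

```python
from collections import defaultdict

# Made-up output in the shape of:
#   git log --numstat --date=format:%Y-%m '--format=@%an|%ad'
SAMPLE_LOG = """@Ana|2024-03

12\t3\tsrc/app.ts
-\t-\tassets/logo.png
@Ben|2024-03

5\t0\tREADME.md
@Ana|2024-04

7\t1\tsrc/app.ts
"""


def lines_added_per_author_month(log_text: str) -> dict[tuple[str, str], int]:
    """Sum added lines per (author, month) from marker lines plus numstat lines."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    key = None
    for line in log_text.splitlines():
        if line.startswith("@"):
            # Marker line written by --format; the month never contains "|".
            author, month = line[1:].rsplit("|", 1)
            key = (author, month)
        elif line.strip() and key is not None:
            added, _deleted, _path = line.split("\t", 2)
            if added != "-":  # binary files show "-" instead of a count
                totals[key] += int(added)
    return dict(totals)
```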

🌙 After-Hours Tab

This one started with a simple question: Are we working too much outside business hours?

By tracking commits made at night or on weekends, the tab surfaces work-life balance issues and potential burnout.

You can even click a ticket to open its corresponding Jira issue and see the story behind the overtime.
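The after-hours check itself is simple. This minimal sketch assumes business hours of 09:00 to 18:00, Monday to Friday, in each author's own time zone. It also assumes timestamps in the strict ISO 8601 form that `git log --format=%aI` prints. The hours and function names are illustrative, not Git Analytics' actual configuration.

```python
from datetime import datetime

WORK_START_HOUR = 9   # assumed start of the working day (inclusive)
WORK_END_HOUR = 18    # assumed end of the working day (exclusive)


def is_after_hours(iso_timestamp: str) -> bool:
    """True for weekend commits or weekday commits outside working hours."""
    ts = datetime.fromisoformat(iso_timestamp)  # keeps the author's UTC offset
    if ts.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (WORK_START_HOUR <= ts.hour < WORK_END_HOUR)


def after_hours_share(iso_timestamps: list[str]) -> float:
    """Fraction of commits made after hours (0.0 when there are no commits)."""
    if not iso_timestamps:
        return 0.0
    flagged = sum(is_after_hours(ts) for ts in iso_timestamps)
    return flagged / len(iso_timestamps)
```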

🔍 Peer Review Tab

A mirror of teamwork. It shows who reviewed the most PRs, how reviews were distributed, and when collaboration peaked.

The insights helped balance review loads and promote fairness across the team.
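To see whether review load is balanced, counting reviews per person is a reasonable start. This minimal sketch assumes each merged PR has already been exported as a list of reviewer names, for instance from your Git hosting provider's API. That export step is outside the snippet, and `review_share` is a hypothetical helper.

```python
from collections import Counter


def review_share(prs: list[list[str]]) -> dict[str, float]:
    """Share of all reviews done by each reviewer, largest first."""
    # set() so a reviewer who appears twice on the same PR is counted once.
    counts = Counter(name for reviewers in prs for name in set(reviewers))
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: n / total for name, n in counts.most_common()}
```

Sorting by share makes it easy to spot whether a few people carry most of the reviews.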

⚙️ Features & Bug Fixes Tab

This view traces every feature and fix, month by month. You can see when the team focused on innovation versus stabilization.

It’s not just metrics—it’s a timeline of progress.
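A monthly split between features and fixes needs some way to classify commits. This sketch assumes Conventional Commits-style subjects such as `feat:`, `fix(scope):`, or `feat!:`. That is an assumption for illustration, and the commit format Git Analytics actually expects may differ.

```python
import re
from collections import Counter

# Matches "feat:" or "fix:", with an optional "(scope)" and optional "!" marker.
KIND_RE = re.compile(r"^(feat|fix)(\([^)]*\))?!?:", re.IGNORECASE)


def monthly_kinds(commits: list[tuple[str, str]]) -> Counter:
    """Count (month, kind) pairs from (month, subject); other commit types are ignored."""
    counts: Counter = Counter()
    for month, subject in commits:
        match = KIND_RE.match(subject)
        if match:
            counts[(month, match.group(1).lower())] += 1
    return counts
```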

🧪 Test Coverage Tab

Too often, tickets close without tests. This tab exposes them.

It tracks which commits lack test files and how our coverage trends year over year.

It’s not about chasing numbers—it’s about accountability and quality.
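Detecting commits without tests mostly comes down to recognising test files by their paths. Note that this measures commits that touch tests, which is a proxy and not line coverage. This minimal sketch assumes common naming conventions: `tests/` or `__tests__/` folders, `*.test.ts` or `*.spec.js` files, and `test_*.py` files. A real project would tune these patterns, and the function names are hypothetical.

```python
import re
from collections import Counter

# Assumed conventions: test folders, *.test/*.spec JS/TS files, test_*.py files.
TEST_PATH_RE = re.compile(
    r"(^|/)(tests?|__tests__)/"
    r"|\.(test|spec)\.[jt]sx?$"
    r"|(^|/)test_[^/]*\.py$"
)


def touches_tests(paths: list[str]) -> bool:
    """True if any changed path looks like a test file."""
    return any(TEST_PATH_RE.search(p) for p in paths)


def commits_without_tests(commits: list[tuple[str, str, list[str]]]) -> list[str]:
    """SHAs of commits (sha, year, changed paths) that change no test file."""
    return [sha for sha, _year, paths in commits if not touches_tests(paths)]


def yearly_test_share(commits: list[tuple[str, str, list[str]]]) -> dict[str, float]:
    """Per year, the fraction of commits that also change a test file."""
    totals: Counter = Counter()
    with_tests: Counter = Counter()
    for _sha, year, paths in commits:
        totals[year] += 1
        if touches_tests(paths):
            with_tests[year] += 1
    return {year: with_tests[year] / totals[year] for year in sorted(totals)}
```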

⏱ Lead Time to Merge

This one surprised me. I thought it was just a performance metric, but it turned out to be a pulse check on our process.

Short lead times reflected smooth collaboration. Longer ones hinted at bottlenecks—slow reviews, delayed testing, or context switching.

It helped us ask the right questions:

- Are we improving our development flow?

- Do larger features take longer to merge?

- Did our new CI setup make a difference?

Lead time isn’t just about speed—it’s about flow. It’s the rhythm of your team’s progress.
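Lead time can be defined in several ways. This minimal sketch assumes it is measured from the first commit on a branch to its merge commit. Both timestamps are expected in ISO 8601 form with UTC offsets, as `git log --format=%cI` prints them. The median is used so that a single long-lived branch does not dominate the picture. The function names are hypothetical.

```python
from datetime import datetime
from statistics import median


def lead_time_hours(first_commit_iso: str, merged_iso: str) -> float:
    """Hours between the first commit on a branch and its merge."""
    # Both strings carry a UTC offset, so the subtraction is time-zone safe.
    delta = datetime.fromisoformat(merged_iso) - datetime.fromisoformat(first_commit_iso)
    return delta.total_seconds() / 3600


def median_lead_time_hours(branches: list[tuple[str, str]]) -> float:
    """Median lead time across (first_commit, merged) pairs; raises on an empty list."""
    return median([lead_time_hours(first, merged) for first, merged in branches])
```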

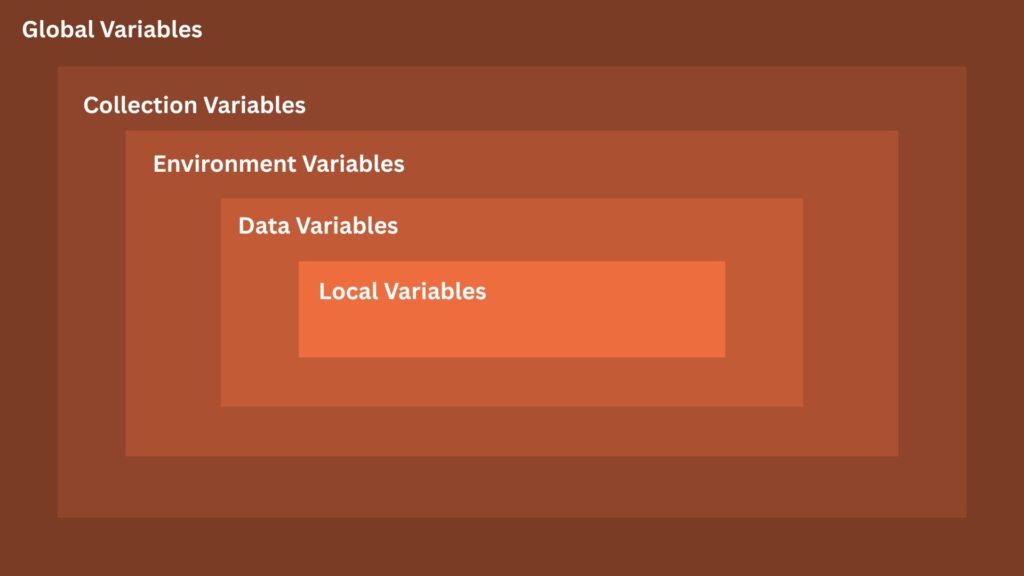

⚠️ Knowledge Risk

Imagine you’re exploring a repository and notice that one developer seems to have written almost all the code for a key module. At first, it might seem impressive — someone really mastered that part of the system.

But then you realize something deeper: if that person leaves or becomes unavailable, the team could struggle to maintain or update the module. The knowledge of how things work lives mostly in one person’s head — and that’s a risk.

The Knowledge Risk tab helps you spot this kind of situation. It shows how coding knowledge is distributed across contributors, highlighting areas where one person holds most of the expertise.

By revealing these “single points of failure,” the tab encourages teams to share ownership, review each other’s work, and build continuity — so the project stays strong, no matter who’s around.
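One way to approximate this is to look at who authored the surviving lines of each module. This minimal sketch assumes the input comes from `git blame --line-porcelain <file>`, which repeats an `author <name>` header for every line. It also assumes a module is "at risk" when one person wrote 80% or more of it. The threshold and the helper names are illustrative, not Git Analytics' own logic.

```python
from collections import Counter

RISK_THRESHOLD = 0.8  # assumed: one author owning >= 80% of a module's lines


def authors_from_porcelain(porcelain: str) -> list[str]:
    """One author name per line, parsed from `git blame --line-porcelain` output."""
    # "author-mail", "author-time", etc. start with "author-", so the space matters;
    # source lines are prefixed with a tab and never match.
    return [line[len("author "):] for line in porcelain.splitlines()
            if line.startswith("author ")]


def knowledge_risk(lines_by_module: dict[str, list[str]],
                   threshold: float = RISK_THRESHOLD) -> dict[str, tuple[str, float]]:
    """Modules where a single author owns at least `threshold` of the lines."""
    risky = {}
    for module, authors in lines_by_module.items():
        if not authors:
            continue
        top_author, top_lines = Counter(authors).most_common(1)[0]
        share = top_lines / len(authors)
        if share >= threshold:
            risky[module] = (top_author, share)
    return risky
```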

Things to be aware of

To get accurate data in the Peer Review, Features & Bug Fixes, Test Coverage, and Lead Time to Merge tabs, adjust the settings in config.js.

To make your commits easier for Git Analytics to extract, follow the commit message format described in “3 Things I Include in a Commit Message That Others Don’t.”

Key Takeaway

AI tools like Amazon Q are productivity multipliers.

They don’t replace creativity or expertise—they amplify it.

Without Amazon Q, Git Analytics might have taken months. But beyond speed, what mattered most was what it revealed about collaboration—between humans, and between humans and AI.

The future of software engineering isn’t humans versus AI.

It’s humans who know how to guide it.

That’s where Git Analytics was born—not from a plan, but from curiosity, mistakes, and the drive to understand the stories hidden in our commits.

💡 Want to Explore Your Team’s Metrics Instantly?

Discover what your commits say about your team’s flow, balance, and growth.